Turi Create - Core ML and Vision

Creating an Image Recognition App with Turi Create - Core ML and Vision

Turi Create is a Python module for building machine learning models, and it was featured with live demos in several WWDC18 sessions. It lets iOS developers who use Core ML focus on the app-side APIs rather than on building the model itself.


“A person’s hand holding a camera lens over a mountain lake” by Paul Skorupskas on Unsplash

With Turi Create, as long as you have a basic idea of what you want the model to do, you don’t need to find an ML expert, or become one, to build a preliminary ML model for prototyping and further refinement.

During WWDC18 Session 712, Turi Create was highlighted extensively. For more details on that session, you can refer to the following Medium article:

Let’s start with a demo of the Turi Create APIs and how to use them. The example app determines whether a photo shows Takeshi Kaneshiro. The sample video is available on YouTube and shows a confidence level alongside each identity check.

A confidence level indicates how sure the model is about a result, on a scale from 0 to 1.0. If the model determines that a photo is not Takeshi Kaneshiro with a confidence level of 0.97, the model is 97% confident in that judgment.
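As a minimal sketch of how an app might present such a result, the Python function below takes a dictionary mapping each label to its confidence and returns the most likely label with its confidence as a percentage. The function name `describe_prediction` and the sample values are hypothetical. For a two-class model like this one, the two confidences sum to 1.0, so 0.97 for “Unknown” leaves 0.03 for “Kaneshiro”.

```python
from __future__ import annotations


def describe_prediction(confidences: dict[str, float]) -> tuple[str, str]:
    """Return the most likely label and its confidence formatted as a percentage.

    `confidences` (hypothetical input) maps each label to a value in [0, 1.0],
    shaped like a classifier's label-to-probability output.
    """
    if not confidences:
        raise ValueError("confidences must not be empty")
    for label, value in confidences.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"confidence for {label!r} is outside [0, 1]: {value}")
    # Pick the label the model is most confident about.
    best_label = max(confidences, key=confidences.get)
    # Format e.g. 0.97 as "97%".
    return best_label, f"{confidences[best_label]:.0%}"


if __name__ == "__main__":
    # Hypothetical output for a photo that is not Takeshi Kaneshiro.
    print(describe_prediction({"Kaneshiro": 0.03, "Unknown": 0.97}))
```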

Image classification starts with image data. Create one folder per category and fill it with images of that category. More data is better, but the suggested minimum is 40 images per category.
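Before training, it can help to check the folder layout against that 40-image minimum. The sketch below counts the image files in each category folder and lists the categories that fall short. The helper names are hypothetical, and the extension set assumes JPEG and PNG files, the formats Turi Create’s image loader is documented to read; adjust it to your dataset.

```python
from __future__ import annotations

from pathlib import Path

# Assumption: only JPEG and PNG files count as images.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}
MIN_IMAGES_PER_CATEGORY = 40  # the suggested minimum from the text


def count_images_per_category(dataset_dir: str | Path) -> dict[str, int]:
    """Count image files in each immediate subfolder (one subfolder per category)."""
    counts: dict[str, int] = {}
    for category_dir in sorted(Path(dataset_dir).iterdir()):
        if not category_dir.is_dir():
            continue  # ignore loose files at the top level
        counts[category_dir.name] = sum(
            1
            for f in category_dir.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_EXTENSIONS
        )
    return counts


def categories_below_minimum(
    counts: dict[str, int], minimum: int = MIN_IMAGES_PER_CATEGORY
) -> list[str]:
    """Return the categories that have fewer than `minimum` images."""
    return [name for name, n in counts.items() if n < minimum]
```

For a hypothetical `dataset` folder containing `kaneshiro/` and `unknown/`, `categories_below_minimum(count_images_per_category("dataset"))` returns an empty list once both folders hold at least 40 images.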

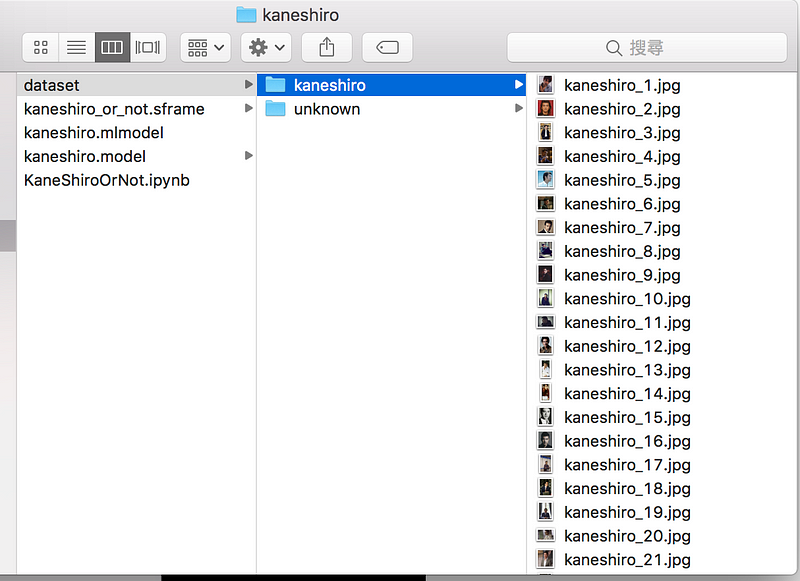

As shown below, I collected 40 images of Takeshi Kaneshiro from the web and placed them in a “kaneshiro” folder. The harder question is what to put in the non-Kaneshiro (“unknown”) data. For this example, I used several portraits of other people for demonstration purposes. For a real project, it’s advisable to consult data science professionals on how best to train your model.

The “kaneshiro” folder holds images of Takeshi Kaneshiro; the “unknown” folder holds images that are not the target.

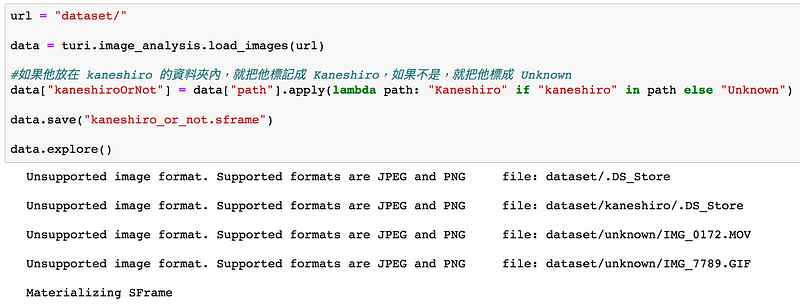

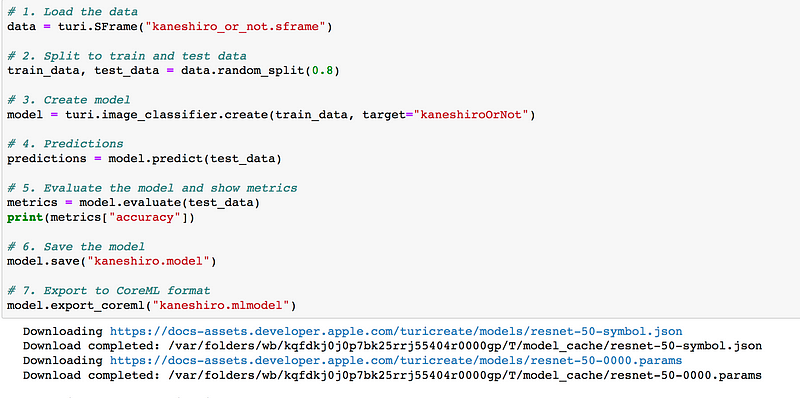

Once your data is organized, a short script trains the model and exports it to Core ML. It takes only about 12 lines of code (excluding comments), and you can download the Jupyter Notebook directly from the GitHub repository (Ref-1).
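The author’s notebook is in Ref-1. Purely as an illustration, the Python sketch below follows the usual Turi Create image-classifier workflow: load the images with `tc.image_analysis.load_images`, derive each label from the image’s folder, split the data, train with `tc.image_classifier.create`, and export with `export_coreml`. The folder names, the 80/20 split, the label mapping, and the output filename `Kaneshiro.mlmodel` are assumptions, not the author’s code. Turi Create is imported inside the function so the labeling helper can be used without it installed.

```python
from __future__ import annotations

import os


def label_for_path(path: str) -> str:
    """Map an image path to a label using its parent folder name.

    Assumed layout: <dataset>/kaneshiro/* and <dataset>/unknown/*.
    """
    folder = os.path.basename(os.path.dirname(path))
    return "Kaneshiro" if folder == "kaneshiro" else "Unknown"


def train_and_export(dataset_dir: str, mlmodel_path: str = "Kaneshiro.mlmodel",
                     max_iterations: int = 10) -> float:
    """Train an image classifier with Turi Create and export it to Core ML.

    Returns the accuracy measured on a held-out test split.
    """
    import turicreate as tc  # imported here so label_for_path works without Turi Create

    # Load every supported image under dataset_dir, keeping each file's path.
    data = tc.image_analysis.load_images(dataset_dir, with_path=True, recursive=True)
    data["label"] = data["path"].apply(label_for_path)

    # Hold out roughly 20% of the images for evaluation (fixed seed for repeatability).
    train_data, test_data = data.random_split(0.8, seed=0)

    # Raise max_iterations if Turi Create warns that the model may not be optimal.
    model = tc.image_classifier.create(train_data, target="label",
                                       max_iterations=max_iterations)

    accuracy = model.evaluate(test_data)["accuracy"]
    model.export_coreml(mlmodel_path)
    return accuracy
```

Deriving labels from folder names keeps the labeling rule in one place, so adding a category is just a matter of adding a folder and updating `label_for_path`.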

Turi Create handles file reading gracefully: when it encounters an unsupported file type, it prints an error message and keeps running. For example, a .DS_Store file (which macOS uses to store a folder’s custom attributes) is reported and then ignored.
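Turi Create already skips such files on its own. If you would rather see them before training, a pre-flight check like the sketch below walks the dataset folder and lists every file that is not a JPEG or PNG image, including hidden files such as .DS_Store. The helper name and the extension set are assumptions for illustration.

```python
from __future__ import annotations

import os

# Assumption: JPEG and PNG are the formats the image loader accepts.
LOADABLE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def find_skipped_files(dataset_dir: str) -> list[str]:
    """List files under dataset_dir (recursively) that are not JPEG/PNG images.

    Paths are returned relative to dataset_dir, sorted for stable output.
    """
    skipped = []
    for dirpath, _dirnames, filenames in os.walk(dataset_dir):
        for name in filenames:
            # os.path.splitext(".DS_Store") has an empty extension, so it is skipped.
            if os.path.splitext(name)[1].lower() not in LOADABLE_EXTENSIONS:
                skipped.append(os.path.relpath(os.path.join(dirpath, name), dataset_dir))
    return sorted(skipped)
```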

The first time you run the Jupyter Notebook, Turi Create connects to Apple’s servers to download the pretrained model data it needs.

Here is the output during model training in Turi Create:

Analyzing and extracting image features.

Number of examples: 74

Number of classes: 2

Number of feature columns: 1

Number of unpacked features: 2048

Number of coefficients: 2049

Starting L-BFGS
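The last two counts in this log are related. Turi Create’s image classifier extracts a 2048-value feature vector per image from a pretrained ResNet-50 network (its default) and trains a logistic classifier on those features, which is consistent with the “Starting L-BFGS” solver line. Assuming the standard parameterization, where one class serves as the reference and every other class gets one weight per feature plus a bias, the coefficient count works out as below. The helper name is hypothetical.

```python
def logistic_coefficient_count(num_classes: int, num_features: int) -> int:
    """Coefficients in a multinomial logistic model with one reference class:
    (num_classes - 1) weight vectors, each with num_features weights plus a bias."""
    if num_classes < 2:
        raise ValueError("need at least two classes")
    return (num_classes - 1) * (num_features + 1)


# Two classes, 2048 features: (2 - 1) * (2048 + 1) = 2049, matching the log above.
print(logistic_coefficient_count(num_classes=2, num_features=2048))
```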

Turi Create also prints helpful tips when the model may not be optimal, such as suggesting a higher max_iterations value.

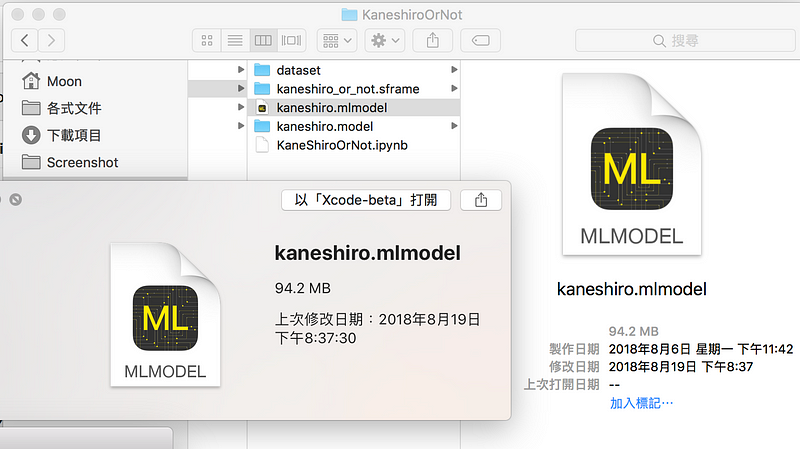

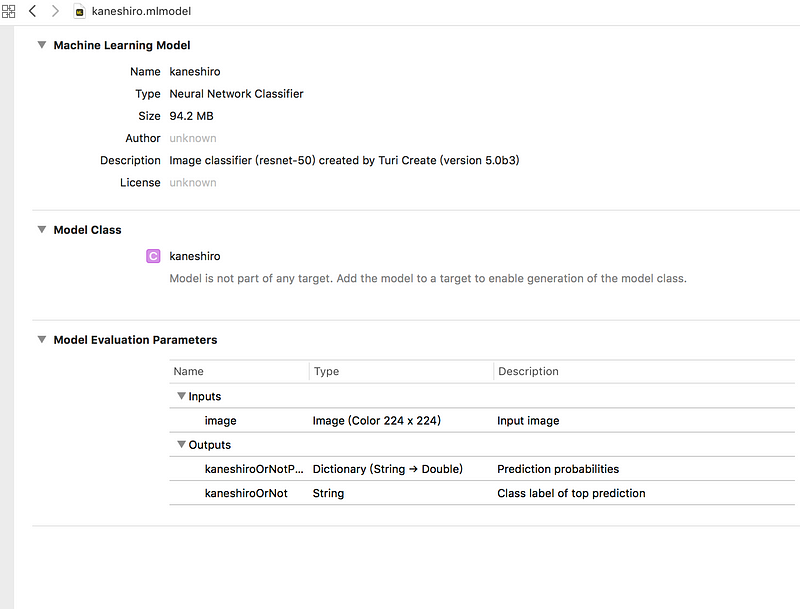

When training finishes, you get an .mlmodel file that describes the trained model along with its inputs and outputs. For this project, the outputs are a label, either “Kaneshiro” or “Unknown”, and a dictionary mapping each label to its confidence level.
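To inspect those inputs and outputs from Python instead of Xcode, a sketch using coremltools (Apple’s Python package for Core ML models; having it installed is an assumption here) can read the model’s specification. `describe_mlmodel` is a hypothetical helper. `predictedFeatureName` and `predictedProbabilitiesName` are the fields of the Core ML model description that name a classifier’s label output and its label-to-confidence dictionary.

```python
from __future__ import annotations


def describe_mlmodel(path: str) -> dict[str, object]:
    """Summarize a Core ML model's inputs and outputs using coremltools."""
    import coremltools as ct  # imported lazily; requires `pip install coremltools`

    spec = ct.utils.load_spec(path)
    description = spec.description
    return {
        "inputs": [feature.name for feature in description.input],
        "outputs": [feature.name for feature in description.output],
        # For classifiers: the label output and the label-to-confidence dictionary.
        "predicted_label": description.predictedFeatureName,
        "predicted_probabilities": description.predictedProbabilitiesName,
    }
```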

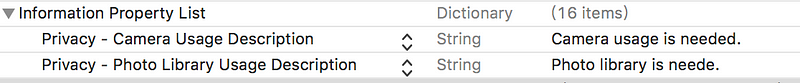

Finally, add the model to your app project. If the app uses the camera or the photo library, be sure to add the corresponding privacy usage descriptions to Info.plist.
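iOS requires a usage-description string in Info.plist before an app may access the camera (`NSCameraUsageDescription`) or the photo library (`NSPhotoLibraryUsageDescription`). As a small sketch, a Python check like the one below, for example run from a build script, reports which of those keys are missing or empty. The helper name and its flags are hypothetical.

```python
from __future__ import annotations

import plistlib

# Info.plist keys iOS requires before an app may access the camera or photo library.
REQUIRED_PRIVACY_KEYS = {
    "camera": "NSCameraUsageDescription",
    "photo_library": "NSPhotoLibraryUsageDescription",
}


def missing_privacy_keys(plist_bytes: bytes, uses_camera: bool,
                         uses_photo_library: bool) -> list[str]:
    """Return the required usage-description keys that are absent or blank."""
    info = plistlib.loads(plist_bytes)  # accepts XML or binary plists
    needed = []
    if uses_camera:
        needed.append(REQUIRED_PRIVACY_KEYS["camera"])
    if uses_photo_library:
        needed.append(REQUIRED_PRIVACY_KEYS["photo_library"])
    return [key for key in needed if not str(info.get(key, "")).strip()]
```

Usage: `missing_privacy_keys(open("Info.plist", "rb").read(), uses_camera=True, uses_photo_library=True)` returns an empty list when both descriptions are present.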

The complete project, including the Xcode files and the Turi Create code, is available in the GitHub repository listed below.

Ref-1: GitHub repository

Ref-2: AppCoda tutorial on using Python and Turi Create to build custom Core ML models

By Marvin Lin on August 20, 2018.